Airflow

Apache Airflow

Airflow is a platform to programmatically author, schedule and monitor workflows. Workflows in Airflow are defined in Python, as DAGs (Directed Acyclic Graphs).

DAG

Wikipedia defines a DAG as follows: "A directed acyclic graph (DAG) is a directed graph with no directed cycles. That is, it consists of vertices and edges (also called arcs), with each edge directed from one vertex to another, such that there is no way to start at any vertex v and follow a consistently-directed sequence of edges that eventually loops back to v again. Equivalently, a DAG is a directed graph that has a topological ordering, a sequence of the vertices such that every edge is directed from earlier to later in the sequence."
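The "topological ordering" in the definition above can be computed directly, and failing to compute one is exactly how a cycle is detected. The sketch below uses Kahn's algorithm in plain Python; `topological_order` is an illustrative name, not part of Airflow.

```python
from collections import deque


def topological_order(vertices, edges):
    """Return a topological ordering of a directed graph.

    Raises ValueError if the graph has a directed cycle (i.e. it is not a DAG).
    Kahn's algorithm: repeatedly emit a vertex with no remaining incoming
    edges, then remove its outgoing edges.
    """
    # Count incoming edges per vertex and record each vertex's successors.
    in_degree = {v: 0 for v in vertices}
    successors = {v: [] for v in vertices}
    for src, dst in edges:
        successors[src].append(dst)
        in_degree[dst] += 1

    # Start from every vertex that has no incoming edges.
    ready = deque(v for v in vertices if in_degree[v] == 0)
    order = []
    while ready:
        v = ready.popleft()
        order.append(v)
        for w in successors[v]:
            in_degree[w] -= 1
            if in_degree[w] == 0:
                ready.append(w)

    # Vertices never emitted lie on, or downstream of, a directed cycle.
    if len(order) != len(vertices):
        raise ValueError("graph has a directed cycle, so it is not a DAG")
    return order
```

For example, `topological_order(["a", "b", "c"], [("a", "b"), ("b", "c")])` yields `["a", "b", "c"]`, while adding the edge `("c", "a")` makes the call raise `ValueError`.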

In the context of Airflow, a DAG is a collection of all the tasks you want to run, organized according to their dependencies. Relating this to the classic definition of a DAG, the vertices represent the tasks you want to execute and the edges represent the relationships (dependencies) between them.
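In Airflow DAG files, edges are usually declared with the `>>` operator (`task1 >> task2` means task2 runs after task1). The sketch below is a hypothetical, pure-Python model of that idea, not Airflow's own classes: `Task`, `Dag` and `upstream_of` are illustrative names. It only shows how tasks become vertices and `>>` becomes edges.

```python
class Dag:
    """Hypothetical container: tasks are vertices, dependencies are edges."""

    def __init__(self, dag_id):
        self.dag_id = dag_id
        self.tasks = {}
        self.edges = set()  # (upstream_task_id, downstream_task_id) pairs

    def upstream_of(self, task_id):
        # Task ids that must finish before `task_id` may start.
        return sorted(src for src, dst in self.edges if dst == task_id)


class Task:
    """Hypothetical stand-in for an Airflow task: one vertex of the DAG."""

    def __init__(self, task_id, dag):
        self.task_id = task_id
        self.dag = dag
        dag.tasks[task_id] = self

    def __rshift__(self, other):
        # `self >> other` adds edges self -> other (other runs after self).
        targets = other if isinstance(other, list) else [other]
        for t in targets:
            self.dag.edges.add((self.task_id, t.task_id))
        # Returning the right-hand side lets chains like a >> b >> c work.
        return other

    def __rrshift__(self, other):
        # `[a, b] >> self`: lists have no >> operator, so Python calls this.
        for t in other:
            t >> self
        return self
```

Wiring up the example DAG shown below would then read `task1 >> task2 >> [branch_a, branch_b, branch_c] >> task3`, after which `dag.upstream_of("Task3")` lists the three branch tasks.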

Example DAG

Example Airflow DAG

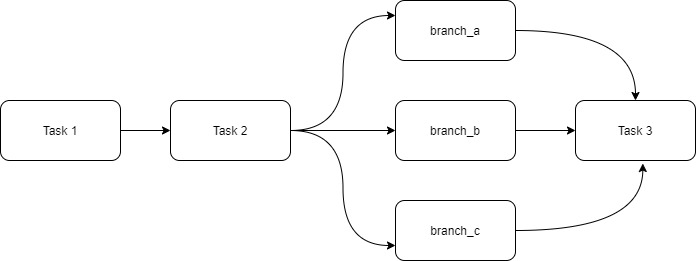

The example DAG above consists of 6 tasks:

- The DAG starts with Task1.

- The DAG proceeds to Task2 after Task1 completes successfully.

- Task2 is a Branch operator (more on this later). It is useful for any kind of conditional logic that determines which downstream path to follow.

- Once the branch operator decides which path to follow, the DAG runs branch_a, branch_b or branch_c.

- Only after that branch is done does the DAG move forward to Task3.
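The control flow of this example can be simulated in plain Python. This is a hypothetical model, not Airflow code: `run_example_dag` and its `choose_branch` argument are illustrative names, and states are plain strings. The join rule used for Task3 (no failed upstream task and at least one success) is modeled on Airflow's `none_failed_min_one_success` trigger rule; in Airflow 2.x the task after a branch usually needs a rule like this, because under the default `all_success` rule the skipped branches would cause it to be skipped as well.

```python
def run_example_dag(choose_branch):
    """Simulate Task1 -> Task2 (branch) -> branch_a|branch_b|branch_c -> Task3.

    `choose_branch` is a hypothetical callable standing in for the branch
    operator's decision logic; it must return one of the branch task ids.
    Returns a dict mapping each task id to its final state.
    """
    branches = ["branch_a", "branch_b", "branch_c"]
    states = {"Task1": "success"}

    # Task2 runs because its upstream task (Task1) succeeded; it only decides.
    chosen = choose_branch()
    if chosen not in branches:
        raise ValueError(f"unknown branch: {chosen}")
    states["Task2"] = "success"

    # Only the chosen branch runs; the other branches are skipped.
    for b in branches:
        states[b] = "success" if b == chosen else "skipped"

    # Join: run Task3 if no branch failed and at least one branch succeeded.
    upstream = [states[b] for b in branches]
    if all(s in ("success", "skipped") for s in upstream) and "success" in upstream:
        states["Task3"] = "success"
    else:
        states["Task3"] = "skipped"
    return states
```

For example, `run_example_dag(lambda: "branch_b")` marks branch_a and branch_c as skipped and still lets Task3 succeed.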

DAG Components

Airflow DAGs are made up of Tasks, and Tasks are built from operators, which often use hooks to reach external systems.

Hooks

Hooks are interfaces to external platforms and services, such as databases or Salesforce.
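To illustrate the hook pattern, here is a minimal sketch that wraps Python's built-in `sqlite3` module. The class `SqliteHookSketch` is hypothetical and is not Airflow's own `SqliteHook`; its method names loosely follow Airflow's database hooks (`get_conn`, `run`, `get_records`), but only to show the shape of the interface: the hook owns the connection details so that tasks do not have to.

```python
import sqlite3


class SqliteHookSketch:
    """Hypothetical hook: hides connection handling behind a small interface."""

    def __init__(self, database=":memory:"):
        self.database = database
        self._conn = None

    def get_conn(self):
        # Open the connection lazily and reuse it on later calls.
        if self._conn is None:
            self._conn = sqlite3.connect(self.database)
        return self._conn

    def run(self, sql, parameters=()):
        # Execute a statement that changes data, then commit it.
        conn = self.get_conn()
        conn.execute(sql, parameters)
        conn.commit()

    def get_records(self, sql, parameters=()):
        # Execute a query and return all result rows as tuples.
        return self.get_conn().execute(sql, parameters).fetchall()
```

A task would then call, for example, `hook.get_records("SELECT task_id, state FROM runs")` without knowing how the connection was opened.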

Operators

Operators define what action needs to be performed. An operator is the atomic unit of a DAG, i.e. it describes a single task in a workflow. Usually, tasks in a DAG do not share information with each other directly.
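Airflow operators implement their action in an `execute(self, context)` method. The sketch below mirrors that idea with hypothetical classes (`OperatorSketch`, `PythonCallableOperator`) that are not Airflow's: each instance describes exactly one task, and its whole action is one `execute` call.

```python
from abc import ABC, abstractmethod


class OperatorSketch(ABC):
    """Hypothetical base class: one operator instance describes one task."""

    def __init__(self, task_id):
        self.task_id = task_id

    @abstractmethod
    def execute(self, context):
        """Perform this task's single unit of work."""


class PythonCallableOperator(OperatorSketch):
    """Hypothetical operator whose action is calling one Python function."""

    def __init__(self, task_id, python_callable):
        super().__init__(task_id)
        self.python_callable = python_callable

    def execute(self, context):
        # The task's entire action is a single call, fed from `context`.
        return self.python_callable(**context)
```

For example, `PythonCallableOperator("greet", lambda name: f"hello {name}").execute({"name": "airflow"})` returns `"hello airflow"`. Making the base class abstract means a task cannot be created without saying what it does.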

Airflow provides an extensive list of operators. Documentation for them can be found here

Some frequently used operators are:

- BashOperator

- EmailOperator

- PythonOperator
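As a rough illustration of what a BashOperator-style task does, the sketch below runs one shell command with the standard `subprocess` module. `BashLikeOperator` is a hypothetical class, not Airflow's BashOperator; it uses `sh` instead of `bash` for portability, and returns the last line of stdout to echo BashOperator's documented behaviour of pushing that line to XCom. EmailOperator is not modeled because it needs a mail server.

```python
import subprocess


class BashLikeOperator:
    """Hypothetical stand-in: the task's single action is one shell command."""

    def __init__(self, task_id, bash_command):
        self.task_id = task_id
        self.bash_command = bash_command

    def execute(self, context=None):
        # check=True turns a non-zero exit code into CalledProcessError,
        # which is how a failed task would surface here.
        result = subprocess.run(
            ["sh", "-c", self.bash_command],
            capture_output=True,
            text=True,
            check=True,
        )
        # Return the last line of stdout (None if there was no output).
        lines = result.stdout.strip().splitlines()
        return lines[-1] if lines else None
```

For example, `BashLikeOperator("say", "echo one; echo two").execute()` returns `"two"`, while a command ending in `exit 3` raises an exception.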

Other important properties of a task

- Each task has an owner and an identifier that is unique within its DAG, known as task_id.

- Each execution of a task within a particular DAG run is known as a Task Instance, which records the state of that execution at a given point in time.

- Task Instances can be in different states, including:

- running

- success

- failed

- skipped, etc.
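The properties above can be summarized in a small data model. `State` and `TaskInstanceSketch` are hypothetical names, not Airflow's classes; the enum covers only the four states listed (Airflow defines more, such as queued, up_for_retry and upstream_failed), and skipping is left to branching logic rather than modeled here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class State(str, Enum):
    # Only the states listed above; Airflow itself defines several more.
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"
    SKIPPED = "skipped"


@dataclass
class TaskInstanceSketch:
    """Hypothetical record of one execution of a task in one DAG run."""

    task_id: str
    owner: str
    run_id: str
    state: Optional[State] = None

    def run(self, action):
        """Execute `action` and record the resulting state."""
        self.state = State.RUNNING
        try:
            action()
        except Exception:
            self.state = State.FAILED
        else:
            self.state = State.SUCCESS
        return self.state
```

The same task_id can therefore appear in many task instances, one per DAG run, each with its own state: a run whose action raises ends in `State.FAILED`, while another run of the same task can end in `State.SUCCESS`.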